
- LayoutLMv3 pre-trains multimodal Transformers for Document AI with unified text and image masking.

- LayoutLMv3 is pre-trained with a word-patch alignment objective to learn cross-modal alignment by predicting whether the corresponding image patch of a text word is masked.

- Contribution

- LayoutLMv3 : first multimodal model in Document AI that doesn’t rely on a pre-trained CNN or Faster R-CNN backbone to extract visual features → saves parameters, eliminates region annotations.

- pre-training tasks : MLM, MIM, WPA (Word-Patch Alignment)

- LayoutLMv3 : a general-purpose model for both text-centric and image-centric Document AI tasks

- Achieves SOTA on:

- form understanding(text-centric) : FUNSD

- receipt understanding : CORD

- document VQA : DocVQA

- document image classification(image-centric) : RVL-CDIP

- document layout detection : PubLayNet

⇒ LayoutLMv3 is a model that performs well on both kinds of task (text-centric and image-centric).

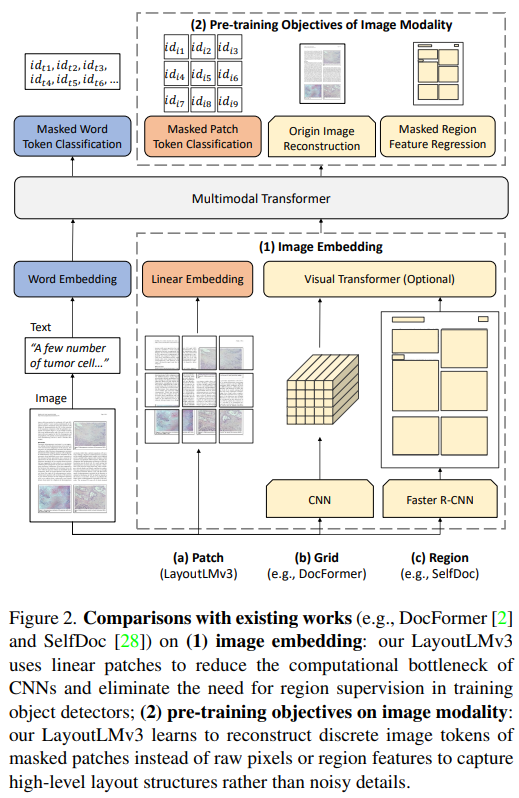

- Comparison with other models (DocFormer, SelfDoc)

- DocFormer : CNN-based image features

- SelfDoc : image features based on masked regions

- LayoutLMv3 :

- No CNN: the image is split into patches, which are linearly embedded

- ⇒ This is meant to remove the CNN bottleneck.

- To learn high-level layout structure rather than noisy low-level details, the model is trained to predict the image tokens of masked patches instead of working on raw pixels or region features.

- LayoutLMv3 pre-training tasks

- image-text multi-modal pre-training task

- MLM(Masked Language Modeling) / MIM(Masked Image Modeling)

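- Pre-training tasks that predict masked word tokens (MLM) and masked image patch tokens (MIM)

- MIM (from BEiT) : the image is converted into discrete tokens with the DALL-E tokenizer, some image patches are masked, and the model predicts the tokens of the masked patches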

- WPA(Word-Patch Alignment)

- To learn cross-modal alignment, the model predicts, for each text word, whether its corresponding image patch is masked
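As a rough illustration of the WPA objective above, the following sketch builds the binary alignment labels. The function name `wpa_labels` and its inputs (`word_to_patches`, `masked_patches`, `masked_words`) are hypothetical, and two assumptions are made: a word counts as "unaligned" if any of its patches is masked, and masked words are skipped when labels are produced.

```python
def wpa_labels(word_to_patches, masked_patches, masked_words):
    """Build Word-Patch Alignment labels (illustrative sketch, not the official code).

    word_to_patches: dict mapping word index -> list of patch indices it overlaps
    masked_patches:  set of patch indices masked for MIM
    masked_words:    set of word indices masked for MLM
    Returns a dict word index -> 1 ("aligned") or 0 ("unaligned").
    """
    labels = {}
    for word, patches in word_to_patches.items():
        if word in masked_words:
            # Assumption: masked text tokens are left out of the WPA loss.
            continue
        # Assumption: one masked patch is enough to make the word "unaligned".
        patch_is_masked = any(p in masked_patches for p in patches)
        labels[word] = 0 if patch_is_masked else 1
    return labels


example = wpa_labels(
    word_to_patches={0: [0], 1: [1, 2], 2: [3]},
    masked_patches={2},
    masked_words={2},
)
print(example)
```

Word 0 keeps all of its patches and is labeled aligned, word 1 loses patch 2 and is labeled unaligned, and word 2 is masked itself, so it gets no label.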

- “SEG” denotes segment-level positions; “[CLS]”, “[MASK]”, “[SEP]”, “[SPE]” are special tokens

- input : concatenation of the text embeddings $Y = y_{1:L}$ and the image embeddings $X = x_{1:M}$

- Text Embedding : word embedding + position embedding

- word embedding : taken from the word embedding matrix of pre-trained RoBERTa

- position embedding

- 1D position : index of tokens within the text sequence

- 2D layout position : bounding box coordinates of the text sequence

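- coordinates are normalized by the image size, then embedding layers embed the x-axis, y-axis, width, and height features (like LayoutLM)

- LayoutLM and LayoutLMv2 use word-level layout positions, where each word has its own coordinates; LayoutLMv3 uses segment-level layout positions instead, where the words in a segment share one bounding box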

- text input

- tokenize the text sequence with Byte-Pair Encoding (BPE); max length = 512

- [CLS] : begin , [SEP] : end, [PAD] : padding
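The 2D layout position step described above (normalize the bounding box by the image size, then embed x, y, width, and height) can be sketched as follows. The helper name `normalize_bbox` is hypothetical, and the integer grid of 1000 is an assumption borrowed from common LayoutLM-style preprocessing; these notes do not state the scale.

```python
def normalize_bbox(bbox, image_width, image_height, scale=1000):
    """Map a pixel-space box (x0, y0, x1, y1) onto an integer grid.

    The returned values are the indices that would be looked up in the
    x, y, width, and height embedding tables. `scale` is an assumption.
    """
    x0, y0, x1, y1 = bbox
    nx0 = (scale * x0) // image_width
    ny0 = (scale * y0) // image_height
    nx1 = (scale * x1) // image_width
    ny1 = (scale * y1) // image_height
    return {
        "x0": nx0, "y0": ny0, "x1": nx1, "y1": ny1,
        "width": nx1 - nx0,
        "height": ny1 - ny0,
    }


# With segment-level positions, every word in a segment would share this box.
print(normalize_bbox((50, 100, 150, 200), image_width=500, image_height=1000))
```

Normalizing by image size makes the position indices independent of the scan resolution, so the same embedding tables can serve documents of any pixel size.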

- Image Embedding : linear-projection image features, as in ViT and ViLT

- $I \in R^{C×H×W}$ ⇒ split into patches of size $P×P$

- each image patch is linearly projected to $D$ dimensions and the results are flattened into a sequence of length $M=HW/P^2$

- add 1D position embedding to each patch

- $C × H × W = 3×224×224, P=16, M=196$
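The patch arithmetic above can be checked with a small pure-Python sketch. The functions `num_patches`, `patchify`, and `linear_project` are hypothetical names, the toy image is single-channel for brevity (the model uses $C=3$), and the weights are made up; this only illustrates the split, flatten, and project steps, not the actual implementation.

```python
def num_patches(height, width, patch_size):
    """M = H * W / P^2, valid when P divides both H and W."""
    if height % patch_size or width % patch_size:
        raise ValueError("patch_size must divide height and width")
    return (height // patch_size) * (width // patch_size)


def patchify(image, patch_size):
    """Split an H x W image (list of rows) into flattened P x P patches, row-major."""
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patches.append([
                image[r][c]
                for r in range(top, top + patch_size)
                for c in range(left, left + patch_size)
            ])
    return patches


def linear_project(patch, weight, bias):
    """Project one flattened patch to D dimensions; weight has D rows of len(patch)."""
    return [sum(w * x for w, x in zip(row, patch)) + b for row, b in zip(weight, bias)]


print(num_patches(224, 224, 16))  # 14 * 14 patches

toy_image = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4 x 4, values 0..15
toy_patches = patchify(toy_image, patch_size=2)
print(toy_patches[0])
```

A 1D position embedding of the same dimension $D$ would then be added to each projected patch before the sequence is concatenated with the text embeddings.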

- LayoutLMv3-base : 12 Transformer encoder layer with 12 head self-attention / hidden size D = 768 / FFN size 3,072

- LayoutLMv3-large : 24 Transformer encoder layer with 16 head self-attention/ hidden size D = 1024 / FFN size 4,096

- pre-training : Adam optimizer, batch size 2,048 for 500,000 steps / weight decay = 1e-2 / $(\beta_1, \beta_2) = (0.9, 0.98)$ / image tokenizer vocab size 8,192 / (base) lr = 1e-4, warmup over the first 4.8% of steps / (large) lr = 5e-5, warmup over the first 10% of steps
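To make the warmup percentages concrete, here is a minimal sketch of the schedule arithmetic. The names `warmup_steps` and `lr_at` are hypothetical, and the shape of the schedule is an assumption: the warmup is taken to be linear, and the rate is held constant afterwards because these notes do not say how it decays.

```python
def warmup_steps(total_steps, warmup_ratio):
    """Number of steps spent warming up the learning rate."""
    return round(total_steps * warmup_ratio)


def lr_at(step, peak_lr, total_steps, warmup_ratio):
    """Learning rate at a given step.

    Assumptions: linear warmup from 0 to peak_lr, then constant.
    The notes do not specify what happens after warmup.
    """
    warmup = warmup_steps(total_steps, warmup_ratio)
    if warmup > 0 and step < warmup:
        return peak_lr * (step / warmup)
    return peak_lr


TOTAL_STEPS = 500_000
print(warmup_steps(TOTAL_STEPS, 0.048))  # base model warmup length
print(warmup_steps(TOTAL_STEPS, 0.10))   # large model warmup length
print(lr_at(12_000, peak_lr=1e-4, total_steps=TOTAL_STEPS, warmup_ratio=0.048))
```

Under these assumptions the base model warms up for 24,000 steps and the large model for 50,000 steps.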

- Conclusion

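⇒ The paper argues that LayoutLMv3, following ViT, performs better because it feeds image features to the model as linear-projection inputs without a CNN: this reduces the parameter count, and the model learns layout at a higher level instead of focusing on fine pixel-level detail.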
